In today’s data-driven world, your business needs to make informed, impactful decisions quickly.

Internal and external data sources fuel our day-to-day decisions, and AI is making it easier for us to summarize and get an overall picture of ever-larger datasets.

However, data is often scattered across silos and lacks accessibility, trustworthiness, and timeliness – slowing down decision-making and giving your competitors a head start.

That’s where data preparation comes into play – the often-overlooked piece that helps make or break your ability to gain any meaningful insights.

What the heck is data preparation?

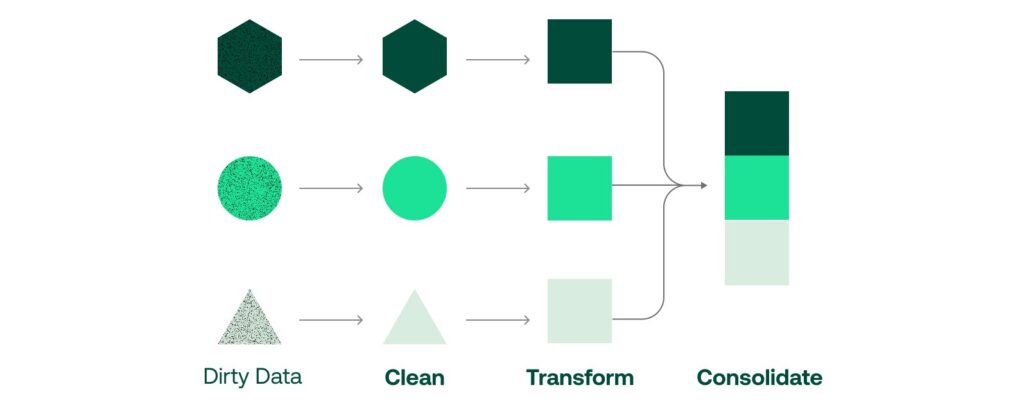

In a few words, I’d define data preparation as the process of consolidating, cleaning, enriching, and shaping data so that it produces meaningful, timely, and trustworthy results.

In this blog post, I’ll give you an overview of the tools you can use, explain why data preparation is important, and walk through the steps in data preparation.

Data Preparation Tools: Our 6 Favorites

A variety of tools are available to streamline the data preparation process, each with unique features, strengths, and costs.

This section provides a detailed breakdown of six prominent data preparation tools:

- Mammoth Analytics

- Tableau Prep

- Alteryx

- Fivetran

- Zoho DataPrep

- Power BI

By examining each tool’s pros, cons, target users, and pricing, you can make more informed decisions about which data preparation software best meets your needs.

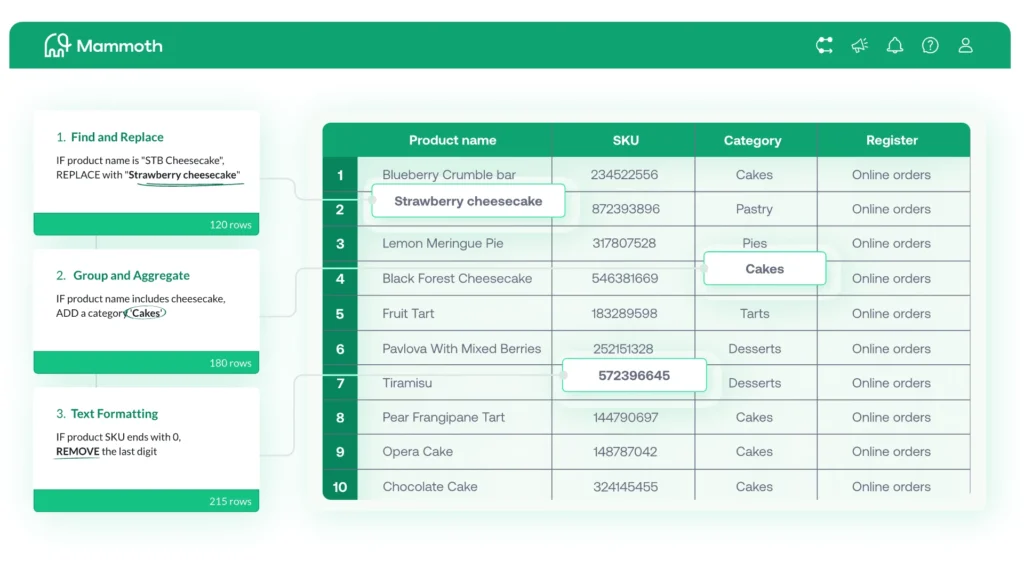

Mammoth Analytics

Mammoth is a cloud-based data preparation tool designed for simplicity and collaboration. It enables users to clean, transform, and blend disparate data without extensive technical skills.

Pros:

- Ease of use: Code-free and user-friendly interface suitable for non-technical users

- Collaboration: Supports real-time collaboration, allowing multiple users to work on data simultaneously.

- Integration: Connects to various data sources and databases

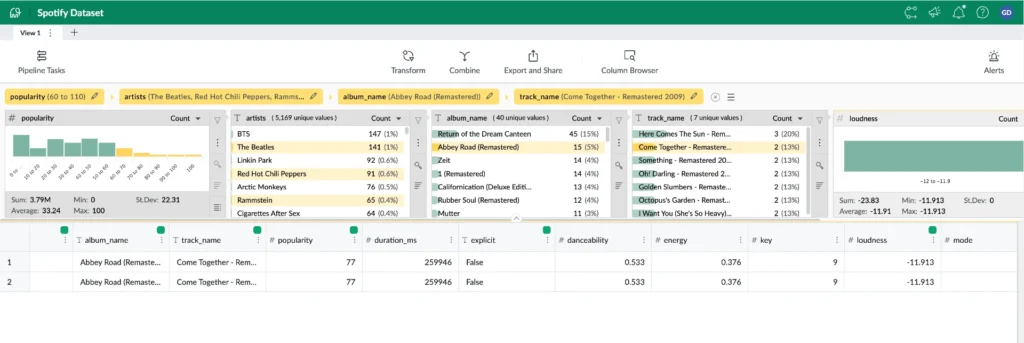

- Data discovery and exploration: Allows users to query data via a point-and-click approach, which helps uncover trends and anomalies without needing to learn SQL.

- Data transformation: Offers comprehensive data transformation and cleaning capabilities.

- Cloud-based solution: Processes large volumes of data without local storage restrictions.

Cons:

- Limited advanced features: Mammoth lacks some advanced features required by more technical users or data scientists.

- Limited dashboarding capabilities: Mammoth allows users to view graphs for exploration purposes but is not designed to be a dashboarding solution.

- Cloud-based only: Offline work is not available.

Who it is meant for:

- Small to medium-sized businesses

- Departments in larger businesses

- Teams needing collaborative data preparation tools.

- Non-technical users looking for an easy-to-use platform.

Cost:

- Starts at $19/month

Are you looking for a data preparation solution? Get started and import up to 1 million rows of data for free.

Tableau Prep

Tableau Prep is part of the Tableau suite, offering tools to help users quickly clean, combine, and transform data before analysis.

It’s designed to work seamlessly with Tableau’s data visualization products.

Pros:

- Visual interface: Intuitive drag-and-drop interface.

- Integration with Tableau: Seamless integration with Tableau Desktop and Tableau Server.

- Smart recommendations: Provides intelligent suggestions for data cleaning and transformation.

- Automation: Supports automated data flow creation and scheduling.

Cons:

- Learning curve: It may require some initial learning for new users

- Limited data discovery features: To find anomalies and trends in data, users may need to use another product

- Limited standalone use: Best used in conjunction with other Tableau products.

Who it is meant for:

- Tableau users looking to streamline their data preparation processes.

- Data analysts and business intelligence professionals.

- Organizations needing integrated data prep and visualization tools.

Cost:

- Tableau Prep is included in the Tableau Creator license, which costs $70 per user per month (billed annually).

Alteryx

Alteryx is a powerful data preparation and analytics platform that allows users to blend and analyze data from multiple sources.

It is known for its extensive features and advanced analytics capabilities.

Pros:

- Comprehensive tools: Offers a wide range of data preparation, blending, and advanced analytics tools.

- Integration: Connects to numerous data sources, including databases, cloud services, and APIs.

- Automation: Allows for the automation of complex workflows.

Cons:

- Cost: Higher price point compared to some other tools.

- Resource intensive: Can require significant computational resources for large datasets and complex processes.

- High technical learning curve: Requires strong technical skills to use.

- Limited data discovery features: To find anomalies and trends in data, users may need to use another product

Who it is meant for:

- Data analysts, data scientists, and business intelligence professionals.

- Medium to large enterprises needing robust data preparation and analytics capabilities.

- Organizations with complex data environments and advanced analytics needs.

Cost:

- Alteryx Designer licenses start at approximately $5,195 per user per year. Additional costs apply to the server and other advanced features.

Fivetran

Fivetran is a cloud-based data integration tool that automates the process of connecting data sources to a data warehouse.

It emphasizes reliability and ease of use.

Pros:

- Automated pipelines: Fully automated data extraction, loading, and transformation processes.

- Schema management: Automatically adapts to changes in source schemas.

- Reliability: Ensures consistent and reliable data replication.

- Scalability: Efficiently handles large volumes of data.

Cons:

- Limited customization: Less flexibility for custom transformations compared to some other tools.

- Cost: It can be expensive for organizations with extensive data integration needs

- High technical learning curve: Requires strong technical skills to use.

- Limited data discovery features: To find anomalies and trends in data, users may need to use another product

Who it is meant for:

- Data engineers and data integration specialists.

- Organizations needing reliable and automated data replication.

- Companies looking to simplify ETL processes.

Cost:

- Fivetran pricing is based on the volume of data processed. It starts at $1 per credit, with a minimum monthly spend of $100. Exact pricing can vary based on usage and specific requirements, so it is recommended that you contact Fivetran for a detailed quote.

Zoho DataPrep

Zoho DataPrep is a data preparation and management tool that helps users clean, transform, and enrich data for analysis.

Pros:

- Ease of use: Intuitive interface with drag-and-drop functionality.

- Integration: Connects to various data sources and integrates well with other Zoho products.

- Data enrichment: Provides tools for data cleaning, transformation, and enrichment.

- Automation: Supports automated workflows for recurring data preparation tasks.

Cons:

- Limited advanced features: May have fewer advanced analytics features than other tools.

- Scalability: May face challenges handling large datasets.

- Limited data discovery features: To find anomalies and trends in data, users may need to use another product

Who it is meant for:

- Small to medium-sized businesses, Zoho ecosystem users, and non-technical users needing basic data preparation.

Cost:

- Pricing starts at $40 per user per month, with a free tier available for limited usage.

Power BI

Power BI is a business analytics service by Microsoft that provides interactive visualizations and business intelligence capabilities.

Its interface allows users to create their own reports and dashboards.

Pros:

- Integration: Seamlessly integrates with Microsoft products and a wide range of data sources.

- Affordable: Competitive pricing, especially for existing Microsoft 365 users.

- Power Query: Robust data preparation capabilities within Power Query.

Cons:

- Complexity: It can be complex to learn and use all features effectively.

- Performance: Can struggle with large datasets or complex transformations.

Who it is meant for:

- Business analysts, small to large enterprises, and existing Microsoft 365 users.

Cost:

- Power BI Pro is around $10 per user per month. Power BI Premium offers advanced features and capacity, starting at $4,995 per capacity per month.

Each of these tools offers unique strengths tailored to different organizational needs and technical capabilities. The choice of tool will depend on factors such as budget, technical requirements, and the complexity of the data environment.

Why is data preparation important?

Imagine you have to decide whether to expand your business into a new market.

Depending on the quality of your data preparation, your data can either mislead you or work in your favor. Well-prepared data can save your company from heading in the wrong direction and uncover opportunities that help you stay ahead of the competition.

Furthermore, I’ve gathered eight reasons why data preparation should be a top priority for your organization:

- Improved data quality: Data preparation gives you more accurate, consistent, and complete data.

- Better decision-making and accurate insights: High-quality, well-prepared data provides a solid foundation for making data-driven decisions and reduces the risk of making decisions based on faulty or incomplete information. You get accurate and reliable insights, revealing trends and patterns that may otherwise be obscured by noise or errors.

- Lower costs: Automating and streamlining data preparation tasks reduces the time spent on manual, repetitive work and can reduce cloud computing costs.

- Easier reproducibility and higher transparency: Proper documentation of data sources, transformation steps, and cleaning processes ensures transparency and allows others to reproduce the analysis, leading to more credible and trustworthy results.

- Enhanced data governance and compliance: Proper data handling and documentation help ensure compliance with data protection regulations and standards, protecting sensitive information and preventing data breaches.

- Increased scale and flexibility: Automated and well-structured data preparation processes can easily scale to handle larger and more complex datasets.

- Smoother collaboration and communication: Clear documentation and standardized practices facilitate better communication and collaboration among team members. Prepared data that is accessible and understandable by different departments or stakeholders promotes cross-functional insights and initiatives.

- Building a foundation for AI and ML: Properly prepared data is essential for training effective machine learning and AI models. High-quality data enables advanced analytical techniques, such as predictive modelling, clustering, and natural language processing, to yield more accurate and actionable results.

Five steps in data preparation

With the right tools, data preparation becomes a breeze. Here are five simple data preparation steps for you to follow:

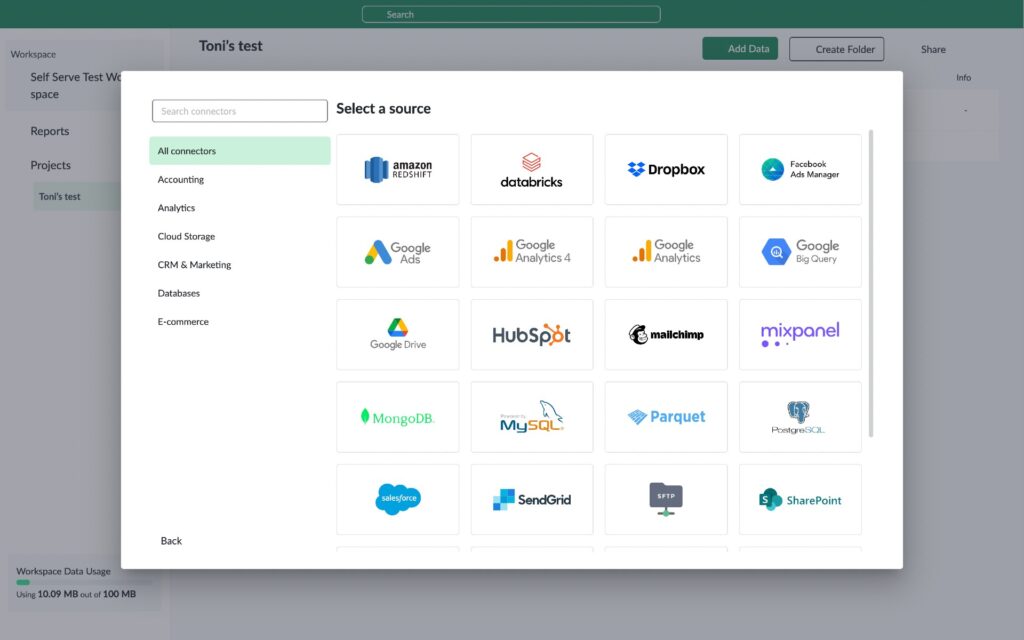

1. Connect and collect: Bring your data into one place

As a starting point, you need to be able to gather data from various sources such as databases, spreadsheets, APIs, and other external datasets.

This lets you view and explore, side by side and in one place, all the relevant data that will end up in the report. With everything together, you can check that the data is relevant and sufficient for the objectives of your analysis.

With Mammoth, you can upload Excel and CSV files and connect databases like MySQL and Postgres for free. For the full list of connectors, see our ready-made integrations.
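If you prefer to see the idea in code, here is a minimal Python sketch of collecting data from two sources into one place. It is only an illustration and not how Mammoth works internally. The CSV text, the in-memory SQLite database, the `orders` table, and all function names are assumptions made up for the example.

```python
import csv
import io
import sqlite3

# Hypothetical CSV export (stands in for an uploaded file).
CSV_TEXT = """order_id,customer,amount
1001,Acme,250.00
1002,Globex,99.50
"""


def rows_from_csv(text):
    """Read CSV text into a list of dicts, tagging each row with its source."""
    reader = csv.DictReader(io.StringIO(text))
    return [dict(row, source="csv") for row in reader]


def rows_from_database(conn):
    """Read the hypothetical `orders` table into the same dict shape."""
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    cursor = conn.execute("SELECT order_id, customer, amount FROM orders")
    return [dict(row, source="database") for row in cursor]


def normalise(row):
    """Give every row the same types, whichever source it came from."""
    return {
        "order_id": int(row["order_id"]),
        "customer": row["customer"],
        "amount": float(row["amount"]),
        "source": row["source"],
    }


# An in-memory SQLite database stands in for MySQL or Postgres here.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.execute("INSERT INTO orders VALUES (1003, 'Initech', 410.0)")

combined = [normalise(r) for r in rows_from_csv(CSV_TEXT) + rows_from_database(conn)]
for row in combined:
    print(row)
```

Tagging each row with its `source` is a small design choice that pays off later, because it lets you trace any odd value back to the system it came from.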

2. Explore: Investigate and analyze your data

Getting a sense of what the data is all about is a key step in data preparation.

This involves conducting an initial review of the data to understand its structure, content, and quality. Another objective for exploration is to identify patterns, anomalies, and key characteristics of all the data.
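As a rough illustration of what such an initial review can look like, the Python sketch below profiles each column of a small dataset. The sample records and the `profile` helper are hypothetical. A real exploration would look at far more than these three measures.

```python
from collections import Counter

# Hypothetical sample records with a blank country and a missing amount.
records = [
    {"customer": "Acme", "country": "US", "amount": 250.0},
    {"customer": "Globex", "country": "", "amount": 99.5},
    {"customer": "Initech", "country": "DE", "amount": None},
    {"customer": "Acme", "country": "US", "amount": 250.0},
]


def is_missing(value):
    """Treat None and empty strings as missing."""
    return value is None or value == ""


def profile(rows):
    """Summarise each column: missing count, distinct count, most common value."""
    columns = rows[0].keys() if rows else []
    summary = {}
    for column in columns:
        values = [row.get(column) for row in rows]
        present = [v for v in values if not is_missing(v)]
        summary[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return summary


report = profile(records)
for column, stats in report.items():
    print(column, stats)
```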

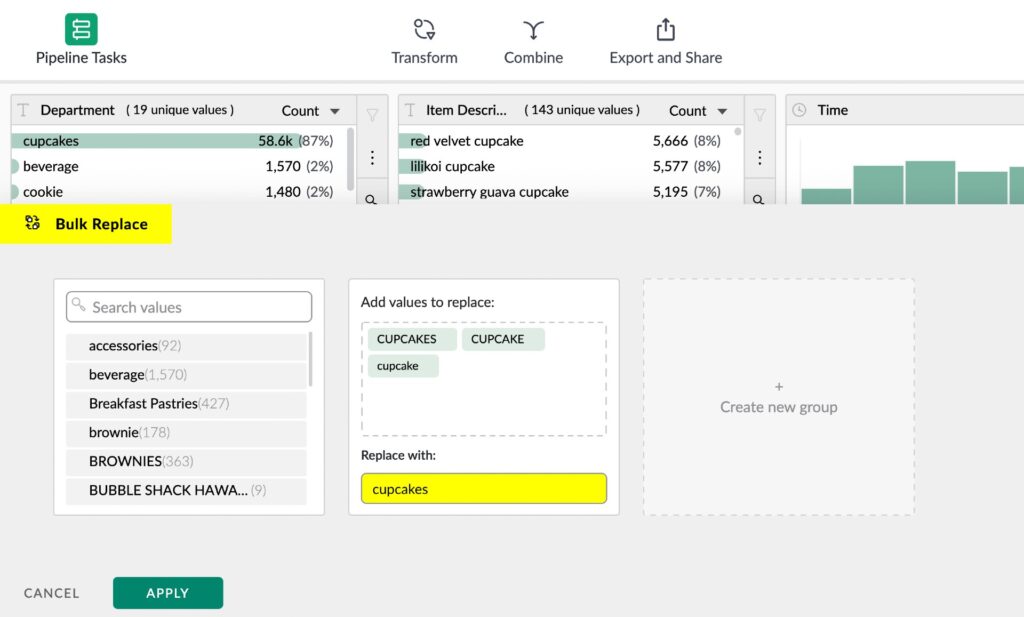

3. Transform: Cleanse, reshape, and blend your data

To clean or transform your data, you need either the right technical skills (SQL and Python are the most common programming languages for data manipulation) or the right tools to do the heavy lifting for you.

Some of the issues to address at this stage are handling missing values, removing duplicates, correcting errors, and detecting outliers.
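To make these four issues concrete, here is a small Python sketch that handles each of them in turn. The sample rows, the `clean` function, and the choices it makes (median fill, a median-absolute-deviation rule with a factor of 5) are assumptions for illustration. The right rules depend on your data.

```python
from statistics import median

# Hypothetical raw rows showing each issue.
raw = [
    {"id": 1, "city": " london ", "amount": 120.0},  # formatting error
    {"id": 2, "city": "Paris", "amount": None},      # missing value
    {"id": 2, "city": "Paris", "amount": None},      # duplicate
    {"id": 3, "city": "Berlin", "amount": 95.0},
    {"id": 4, "city": "Madrid", "amount": 110.0},
    {"id": 5, "city": "Rome", "amount": 9000.0},     # likely outlier
]


def clean(rows, outlier_factor=5):
    """Clean rows; assumes at least one row has a known amount."""
    # Correct simple formatting errors: stray whitespace and inconsistent casing.
    tidy = [dict(row, city=row["city"].strip().title()) for row in rows]

    # Remove duplicates, keeping the first row seen for each id.
    seen, unique = set(), []
    for row in tidy:
        if row["id"] not in seen:
            seen.add(row["id"])
            unique.append(row)

    # The median and the median absolute deviation are barely moved by outliers.
    known = [row["amount"] for row in unique if row["amount"] is not None]
    centre = median(known)
    spread = median([abs(value - centre) for value in known])

    cleaned = []
    for row in unique:
        # Fill missing amounts with the median of the known amounts.
        amount = centre if row["amount"] is None else row["amount"]
        # Flag (rather than delete) values far from the centre.
        is_outlier = spread > 0 and abs(amount - centre) > outlier_factor * spread
        cleaned.append(dict(row, amount=amount, is_outlier=is_outlier))
    return cleaned


cleaned = clean(raw)
for row in cleaned:
    print(row)
```

Note that the sketch flags the outlier instead of removing it. Whether an extreme value is an error or a genuine large order is usually a business question, not a technical one.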

Sometimes, you need to blend and integrate your data, which involves combining data from multiple sources into a cohesive dataset and ensuring its consistency. The above practices ultimately help with data normalization, standardization, aggregation and enrichment.
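Here is a hedged Python sketch of blending: it joins hypothetical order records to a hypothetical customer list, standardizes the country labels, and aggregates the result. The data, the `COUNTRY_ALIASES` mapping, and the function names are all invented for the example.

```python
from collections import defaultdict

# Two hypothetical sources that share a customer_id key.
orders = [
    {"customer_id": "C1", "amount": 250.0},
    {"customer_id": "C2", "amount": 99.5},
    {"customer_id": "C1", "amount": 50.0},
    {"customer_id": "C9", "amount": 10.0},  # no matching customer
]
customers = [
    {"customer_id": "C1", "name": "Acme", "country": "usa"},
    {"customer_id": "C2", "name": "Globex", "country": "U.S."},
]

# Assumed mapping of known spellings to one standard code.
COUNTRY_ALIASES = {"usa": "US", "u.s.": "US", "us": "US"}


def standardise_country(value):
    """Map known aliases to one code; otherwise just trim and upper-case."""
    key = value.strip().lower()
    return COUNTRY_ALIASES.get(key, value.strip().upper())


def blend(orders, customers):
    """Left-join orders to customers, keeping unmatched orders visible."""
    lookup = {c["customer_id"]: c for c in customers}
    blended = []
    for order in orders:
        customer = lookup.get(order["customer_id"])
        blended.append({
            **order,
            "name": customer["name"] if customer else None,
            "country": standardise_country(customer["country"]) if customer else None,
        })
    return blended


def total_by(rows, key):
    """Aggregate amounts per value of `key`."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row["amount"]
    return dict(totals)


blended = blend(orders, customers)
totals = total_by(blended, "country")
print(totals)
```

Keeping the unmatched order (under the `None` key) rather than silently dropping it is deliberate: a total that quietly loses rows is exactly the kind of inconsistency blending is supposed to prevent.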

With Mammoth, your whole team will be able to create and manage data cleaning and transformation tasks with an intuitive visual editor.

4. Automate: Build a data pipeline for visibility, maintenance and repeatability

In many cases, data cleansing needs to happen on recurring data.

Even if the cleansing is a one-off, it usually takes several iterations to get it right.

To manage recurring data or repeated iterations, you need a maintainable data pipeline where all your transformations are recorded. This practice helps provide context and facilitate reproducibility.
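One way to picture a pipeline with recorded transformations is the Python sketch below. The `Pipeline` class and the two example steps are hypothetical and deliberately tiny. They only show the principle of naming each step and keeping the steps in order so they can be re-run on the next batch.

```python
class Pipeline:
    """Keeps an ordered, named record of every transformation."""

    def __init__(self):
        self.steps = []  # ordered (name, function) pairs

    def step(self, name):
        """Decorator that registers a function as the next pipeline step."""
        def register(func):
            self.steps.append((name, func))
            return func
        return register

    def run(self, rows):
        """Apply every step in order and report row counts per step."""
        history = []
        for name, func in self.steps:
            rows_in = len(rows)
            rows = func(rows)
            history.append({"step": name, "rows_in": rows_in, "rows_out": len(rows)})
        return rows, history


pipeline = Pipeline()


@pipeline.step("drop rows without an amount")
def drop_missing(rows):
    return [r for r in rows if r.get("amount") is not None]


@pipeline.step("convert amount to cents")
def to_cents(rows):
    return [dict(r, amount_cents=round(r["amount"] * 100)) for r in rows]


# The same pipeline can be re-run unchanged on every new batch.
batch = [{"id": 1, "amount": 12.5}, {"id": 2, "amount": None}, {"id": 3, "amount": 3.2}]
result, history = pipeline.run(batch)
for entry in history:
    print(entry)
```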

5. Govern: Allow for easy audit and maintenance

Good, trustworthy data brings about confidence in decision-making.

This trust is created by the ability to easily trace and investigate various aspects of the data journey, including an audit trail of how, when, and by whom the data was transformed and the ability to make changes seamlessly.
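As a simple illustration of an audit trail, this Python sketch records what was changed, by whom, and when, every time a transformation is applied. The `apply_with_audit` helper, the user names, and the log format are assumptions for the example, not a description of any particular product.

```python
from datetime import datetime, timezone


def apply_with_audit(rows, transform, description, user, log):
    """Apply a transformation and append an audit entry describing it."""
    result = transform(rows)
    log.append({
        "what": description,
        "who": user,
        "when": datetime.now(timezone.utc).isoformat(),
        "rows_before": len(rows),
        "rows_after": len(result),
    })
    return result


audit_log = []
data = [{"id": 1, "email": "A@Example.com"}, {"id": 2, "email": None}]

data = apply_with_audit(
    data,
    lambda rows: [r for r in rows if r["email"]],
    "removed rows without an email",
    "alice",  # hypothetical user
    audit_log,
)
data = apply_with_audit(
    data,
    lambda rows: [dict(r, email=r["email"].lower()) for r in rows],
    "lower-cased emails",
    "bob",  # hypothetical user
    audit_log,
)

for entry in audit_log:
    print(entry)
```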

These steps help you ensure that the data is accurate, reliable, and suitable for the intended analysis, ultimately leading to more meaningful and actionable insights.

The most common challenges in data preparation

Implementing proper data preparation practices in your organization can be challenging due to a variety of factors.

Here are some of the key challenges our clients have come across:

- Data quality issues: Missing values or variability in data formats, units, and standards can hinder analysis and lead to incorrect insights. Redundant records can inflate datasets and skew analysis.

- Data silos and disparate data: Different departments or units may use separate systems, making it difficult to integrate data. Sometimes poor interdepartmental communication can lead to fragmented data management practices.

- Complex and large data sources: Handling structured, semi-structured, and unstructured data from multiple sources requires sophisticated tools and expertise. Large datasets demand significant computational resources and efficient processing techniques.

- Technical challenges: Integrating various data preparation tools and platforms can be complex. Converting raw data into a usable format requires specialized skills and can be resource-intensive.

- Skill gaps: Staff with insufficient knowledge of data preparation techniques can engage in suboptimal practices. Continuous education and training are necessary to keep up with evolving tools and methodologies.

- Data governance: Ensuring data handling practices comply with regulations (e.g., GDPR, HIPAA) adds complexity. Clearly defining who is responsible for data quality and management can take time and effort.

- Resource constraints: There are high costs associated with acquiring and maintaining data preparation tools and hiring skilled personnel. Proper data preparation is time-consuming, and organizations often rush this phase to move quickly to analysis.
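To show how the first challenge in the list above, variability in formats and units, can play out in practice, here is a small Python sketch that standardizes mixed date formats and weight units. The list of accepted formats, the conversion table, and the sample shipments are assumptions. Values that cannot be interpreted are left empty for manual review rather than guessed.

```python
from datetime import datetime

# Assumed list of formats seen in the sources, tried in this order.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")
# Conversion factors to kilograms.
TO_KG = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def parse_date(text):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def to_kg(value, unit):
    """Convert a weight to kilograms, or None if the unit is unknown."""
    factor = TO_KG.get(unit.strip().lower())
    return None if factor is None else round(value * factor, 3)


# Hypothetical shipments recorded by different teams.
shipments = [
    {"shipped": "2024-03-05", "weight": 2.0, "unit": "kg"},
    {"shipped": "05/03/2024", "weight": 500, "unit": "g"},
    {"shipped": "05.03.2024", "weight": 10, "unit": "LB"},
    {"shipped": "next week", "weight": 1, "unit": "stone"},
]

standardised = [
    {"shipped": parse_date(s["shipped"]), "weight_kg": to_kg(s["weight"], s["unit"])}
    for s in shipments
]
for row in standardised:
    print(row)
```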

Are you looking for a data preparation tool for your organization?

Get Started, and turn your data into meaningful insights.

Future: Data preparation for AI and machine learning

Preparing data for machine learning and feeding clean data to AI models have become some of the most frequent topics in Mammoth’s customer discussions.

Companies from startups to enterprises are wondering how to make sure their AI-powered applications get accurate data.

Here are some of the key reasons why clean data and good data preparation practices are essential in AI-powered services:

- Data preparation helps ensure the accuracy of AI models. Noise and errors in the data can lead to incorrect predictions and poor performance.

- Processing well-prepared data requires fewer computational resources. Cleaning data late in AI model development can be time-consuming, so having clean data from the start can save time and effort.

- AI models trained on clean data are more likely to generalize well to new, unseen data. Dirty data can lead to overfitting, where the model performs well on training data but poorly on real-world data.

- Clean data helps in reducing biases that can arise from incorrect or incomplete data. Biased data can lead to biased AI models, which can perpetuate unfairness.

- Well-prepared and clean data increases the reliability and robustness of AI models, which are essential for critical applications like healthcare, finance, and autonomous driving.

- Clean data helps make models more interpretable and understandable. It is easier to analyze and draw insights from clean data, which in turn makes a model’s decisions easier to explain.

To summarize, clean data is fundamental to the success and efficacy of AI models, impacting their performance, reliability, and fairness.

Are you looking for a data preparation tool for your organization? Get Started today, and get your data ready for business-critical reporting, machine learning, and AI models.